Efficient AI Hardware

Co-Design Digital Accelerators

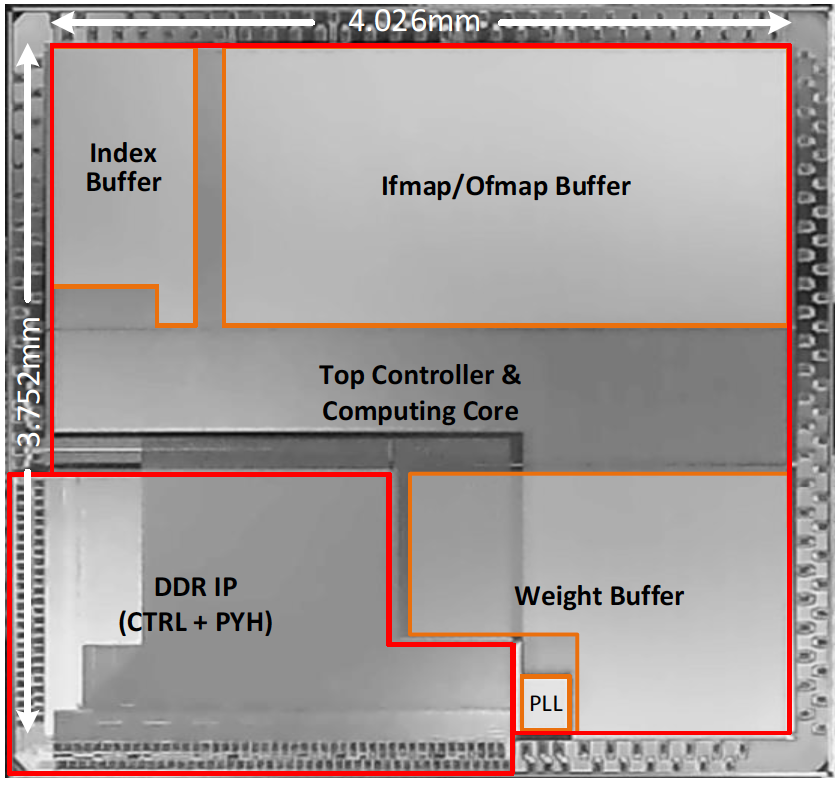

We designed a sparse CNN accelerator (AC-Codesign-v1) using a compression-hardware co-design approach; it achieves 3.73 TOPS/W in 40 nm LP CMOS technology. The AC-Codesign-v1 chip has been successfully validated on a variety of applications, including head estimation, pose estimation, medical image segmentation, and time-of-flight (ToF) enhancement. Selected demos are shown here.
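To put the efficiency figure in perspective, 3.73 TOPS/W can be converted into an average energy per operation, since 1 TOPS/W corresponds to $10^{12}$ operations per joule. This is plain arithmetic on the reported number; how the chip counts an "operation" (for example, whether one multiply-accumulate counts as two operations, or whether operations skipped due to sparsity are included) is not stated here and is left as an open assumption.

```latex
E_{\text{op}} = \frac{1}{3.73\ \text{TOPS/W}}
             = \frac{1\ \text{J}}{3.73 \times 10^{12}\ \text{ops}}
             \approx 2.68 \times 10^{-13}\ \text{J/op}
             \approx 0.27\ \text{pJ/op}
```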

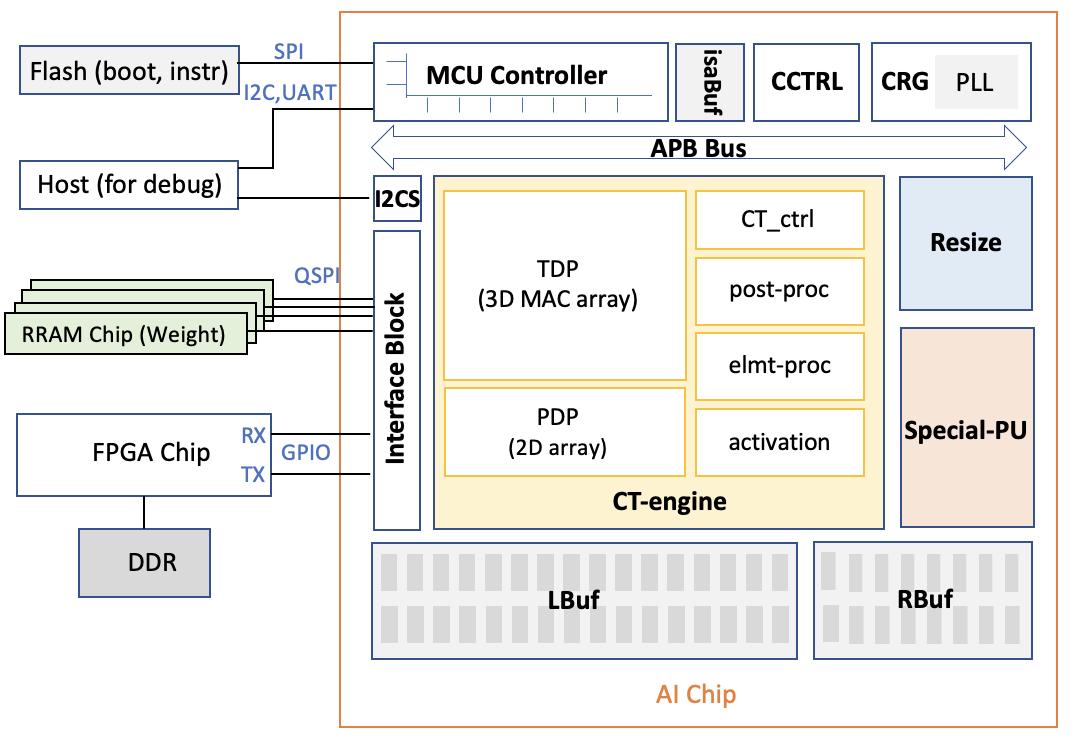

We recently designed and taped out a co-designed Transformer accelerator (AC-Transformer) in TSMC 28 nm technology. It integrates recent mixture-of-experts (MoE) sparse attention techniques with strategies tailored for dense prediction tasks, including sparse-to-dense feature map pruning and multi-chip-aware network splitting. The AC-Transformer has been successfully tested on an FPGA platform, as shown here.
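One generic way to read "sparse-to-dense feature map pruning" is sketched below: only the highest-scoring tokens of a feature map pass through a compute block, and the results are scattered back into their original positions so the output is again a dense map suitable for dense prediction. This is a minimal illustration under stated assumptions, not the AC-Transformer's actual dataflow. The L2-norm importance score, the bypass of pruned tokens, and the names `token_scores`, `sparse_to_dense`, and `toy_block` are all hypothetical.

```python
import math
from typing import Callable, List

Token = List[float]


def token_scores(tokens: List[Token]) -> List[float]:
    # Hypothetical importance score: L2 norm of each token's feature vector.
    return [math.sqrt(sum(x * x for x in t)) for t in tokens]


def sparse_to_dense(tokens: List[Token], keep: int,
                    block: Callable[[List[Token]], List[Token]]) -> List[Token]:
    """Run `block` only on the `keep` highest-scoring tokens, then scatter the
    results back so the output has one token per spatial position again."""
    scores = token_scores(tokens)
    # Indices of the top-`keep` tokens, re-sorted into spatial order.
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:keep])
    processed = block([tokens[i] for i in kept])
    # Assumption: pruned positions bypass the block and keep their input features.
    dense = [list(t) for t in tokens]
    for idx, out in zip(kept, processed):
        dense[idx] = out
    return dense


def toy_block(ts: List[Token]) -> List[Token]:
    # Stand-in for an attention/MLP block: scale every feature by 2.
    return [[2.0 * x for x in t] for t in ts]


# A 2x2 feature map flattened to 4 tokens with 2 channels each.
fmap = [[3.0, 4.0], [0.1, 0.0], [1.0, 0.0], [0.0, 0.2]]
print(sparse_to_dense(fmap, keep=2, block=toy_block))
# prints [[6.0, 8.0], [0.1, 0.0], [2.0, 0.0], [0.0, 0.2]]
```

Keeping the output dense is what distinguishes this from plain token pruning for classification: a segmentation or depth head still expects a value at every spatial position, so pruned tokens must be restored rather than dropped.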